This article was originally published as an interview conducted by the StableLab team. To review the full interview, please see the original source: https://x.com/StableLab/status/1950990232008139233

Renee Davis has been deep in governance design for years. She founded TalenDAO, a pioneering research DAO focused on organizational science, helped launch Rare Compute Foundation, and now leads development at OpenMatter Network.

Her background is in computational social science, with a focus on simulations, agent modeling, and systems thinking. After years of seeing tokenomics used as a buzzword with little rigor behind it, she decided to build something better.

Her current obsession: turning tokenomics from pitch-deck aesthetics into models you can run, stress-test, and iterate on.

From Theory to Simulation: Breaking Down the Tool

Renee’s framework isn’t a whitepaper or a spreadsheet; it’s a working simulation stack. Built in Python with Streamlit, it includes three interconnected modules designed to test token mechanics under real-world uncertainty.

Each simulation targets a different layer of token design: supply, emissions, and infrastructure cost. Together, they give protocol teams a way to pressure-test assumptions before launch and to adapt to dynamic market conditions rather than idealized ones.

1. Token Supply Simulator

This module models how a token behaves over time: price, supply, risk, liquidity, and adoption under probabilistic stress.

It captures:

Market cap and price trajectories

Circulating vs staked supply

Emissions, burns, and deflationary dynamics

Risk-adjusted performance via the Sharpe ratio

Adoption curves and liquidity constraints
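The article does not publish the simulator's source, so the following is only a minimal Python sketch of how one period of supply accounting and a Sharpe ratio might be computed. The accounting order (emit, then burn, then move tokens between circulating and staked supply) and every name and parameter here (`SupplyState`, `step_supply`, `burn_rate`, `stake_rate`, `unstake_rate`) are hypothetical assumptions, not Renee's implementation.

```python
import math
from dataclasses import dataclass
from statistics import mean, stdev


@dataclass
class SupplyState:
    circulating: float  # liquid tokens held outside staking
    staked: float       # tokens locked in staking


def step_supply(state: SupplyState, emission: float, burn_rate: float,
                stake_rate: float, unstake_rate: float) -> SupplyState:
    """Advance supply by one period under hypothetical accounting rules."""
    # New emissions enter circulating supply first.
    circulating = state.circulating + emission
    # A fraction of circulating supply is burned (the deflationary pressure).
    circulating -= circulating * burn_rate
    # Tokens then flow between circulating and staked supply.
    to_stake = circulating * stake_rate
    to_unstake = state.staked * unstake_rate
    return SupplyState(
        circulating=circulating - to_stake + to_unstake,
        staked=state.staked + to_stake - to_unstake,
    )


def sharpe_ratio(prices: list[float], risk_free_per_period: float = 0.0) -> float:
    """Per-period (non-annualized) Sharpe ratio of simple returns; needs 3+ prices."""
    returns = [b / a - 1 for a, b in zip(prices, prices[1:])]
    excess = [r - risk_free_per_period for r in returns]
    sd = stdev(excess)  # sample standard deviation of excess returns
    return mean(excess) / sd if sd > 0 else math.nan


# Hypothetical inputs: one year of monthly steps.
state = SupplyState(circulating=1_000_000, staked=0)
for _ in range(12):
    state = step_supply(state, emission=20_000, burn_rate=0.01,
                        stake_rate=0.05, unstake_rate=0.02)
print(f"circulating={state.circulating:,.0f} staked={state.staked:,.0f}")
print(f"Sharpe: {sharpe_ratio([1.00, 1.10, 0.99, 1.089]):.3f}")
```

Because burns only touch circulating supply in this sketch, a high staking rate shelters tokens from the burn; a real model may choose a different order.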

A key innovation is the dynamic emission function: token emissions increase with protocol profits, but only up to a point. After that, emissions decrease. This inverted-U curve prevents runaway inflation and rewards actual usage.

“We want to be more like Bitcoin and less like Ethereum,” Renee explained. “Emission should be responsive, not just linear.”
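The interview describes the shape of the emission curve but not its formula. One simple function with that inverted-U shape is `max_emission * x * exp(1 - x)`, where `x = profit / peak_profit`: it is zero at zero profit, reaches exactly `max_emission` when profit equals `peak_profit`, and decays afterward. The sketch below uses that curve as an assumption; the formula and the names `dynamic_emission`, `peak_profit`, and `max_emission` are illustrative, not the tool's actual emission function.

```python
import math


def dynamic_emission(profit: float, peak_profit: float, max_emission: float) -> float:
    """Hypothetical inverted-U emission curve.

    Rises with profit up to `peak_profit`, where it equals `max_emission`,
    then declines toward zero. Zero or negative profit emits nothing.
    """
    if profit <= 0:
        return 0.0
    x = profit / peak_profit
    return max_emission * x * math.exp(1 - x)


# Hypothetical parameters: emissions peak at 10,000 tokens when profit is 500,000.
for p in [0, 250_000, 500_000, 1_000_000, 2_000_000]:
    print(p, round(dynamic_emission(p, peak_profit=500_000, max_emission=10_000)))
```

In this form, `peak_profit` is the main design lever: it marks the profit level beyond which the protocol deliberately emits fewer new tokens.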

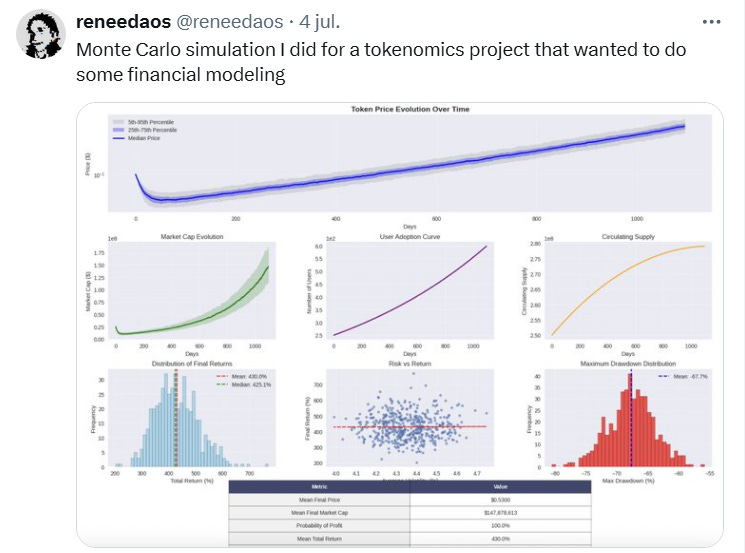

The model also introduces randomness into each run through Monte Carlo simulation, letting teams explore the full distribution of possible outcomes rather than just the average scenario.

Figure: Example output from Renee's Monte Carlo simulation tool, showing token price evolution, user adoption, risk-return scatter, and more. Used to pressure-test financial assumptions under real-world volatility.
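A Monte Carlo study repeats a randomized simulation many times and examines the spread of results. The sketch below assumes per-period log-returns drawn from a normal distribution, a common simplification that is not necessarily the model Renee's tool uses; `simulate_price_path`, `monte_carlo`, and all default parameters are hypothetical. Each run also yields a (volatility, total return) point of the kind plotted in a risk-return scatter.

```python
import math
import random
import statistics


def simulate_price_path(p0: float, periods: int, mu: float, sigma: float,
                        rng: random.Random) -> list[float]:
    """One random price path; per-period log-returns ~ N(mu, sigma) (assumed model)."""
    prices = [p0]
    for _ in range(periods):
        prices.append(prices[-1] * math.exp(rng.gauss(mu, sigma)))
    return prices


def monte_carlo(n_runs: int = 1000, periods: int = 365, p0: float = 1.0,
                mu: float = 0.0005, sigma: float = 0.04, seed: int = 42):
    """Repeat the simulation and summarize the distribution of final prices."""
    rng = random.Random(seed)  # fixed seed so the whole experiment is reproducible
    finals, scatter = [], []
    for _ in range(n_runs):
        prices = simulate_price_path(p0, periods, mu, sigma, rng)
        returns = [b / a - 1 for a, b in zip(prices, prices[1:])]
        m = sum(returns) / len(returns)
        vol = math.sqrt(sum((r - m) ** 2 for r in returns) / len(returns))
        finals.append(prices[-1])
        scatter.append((vol, prices[-1] / p0 - 1))  # (risk, return) for one run
    cuts = statistics.quantiles(finals, n=20)  # 19 cut points: 5%, 10%, ..., 95%
    summary = {
        "p5": cuts[0],
        "median": statistics.median(finals),
        "p95": cuts[-1],
        "prob_below_start": sum(f < p0 for f in finals) / n_runs,
    }
    return summary, scatter


summary, scatter = monte_carlo()
print({k: round(v, 3) for k, v in summary.items()})
```

The 5th and 95th percentiles, together with the probability of ending below the starting price, describe the downside that a single average-case projection hides.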

2. Emission Rate Simulator

If the first simulation deals with how supply behaves, this one governs how it gets distributed and when.

It allows teams to:

Define profit-based emission schedules

Set investor and team vesting parameters

Calibrate release slopes and cliffs

Allocate emissions across categories (staking, public sale, ecosystem treasury)
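The article lists these parameters but not the schedule math. A common way to express a cliff plus a linear release slope: nothing unlocks before the cliff, the amount that accrued during the cliff unlocks when it ends, and the remainder releases evenly until full vesting. The function names, the category weights, and the 12/48-month example below are hypothetical assumptions for illustration.

```python
def vested_fraction(month: int, cliff_months: int, vesting_months: int) -> float:
    """Fraction of an allocation unlocked after `month` months (cliff + linear slope)."""
    if month < cliff_months:
        return 0.0  # still inside the cliff: nothing is released
    # After the cliff, release follows a straight line reaching 100% at vesting_months.
    return min(month / vesting_months, 1.0)


def split_emission(amount: float, shares: dict[str, float]) -> dict[str, float]:
    """Divide one period's emission across categories in proportion to their weights."""
    total = sum(shares.values())
    return {category: amount * share / total for category, share in shares.items()}


# Hypothetical weights; the article names the categories but not the numbers.
SHARES = {"staking": 0.5, "public_sale": 0.2, "ecosystem_treasury": 0.3}

team_allocation = 10_000_000
for month in (6, 12, 24, 48):
    unlocked = team_allocation * vested_fraction(month, cliff_months=12, vesting_months=48)
    print(f"month {month}: {unlocked:,.0f} team tokens unlocked")
print(split_emission(100_000, SHARES))
```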

Instead of fixed, time-based unlocks, emissions here are tied to actual protocol performance. This means tokens get distributed when the protocol is working, not just because a date passed.
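To make profit-tied distribution concrete, the sketch below releases tokens from a fixed pool in proportion to each period's profit above a threshold, with an optional per-period cap. This is an assumed mechanism; `profit_gated_release`, `tokens_per_profit`, `min_profit`, and `max_per_period` are hypothetical names, not the simulator's API.

```python
from typing import Optional


def profit_gated_release(profits: list[float], pool: float, tokens_per_profit: float,
                         min_profit: float = 0.0,
                         max_per_period: Optional[float] = None) -> list[float]:
    """Release tokens from `pool` only in periods where profit exceeds `min_profit`."""
    releases = []
    remaining = pool
    for profit in profits:
        if profit <= min_profit:
            amount = 0.0  # no profit above the threshold: nothing is emitted
        else:
            amount = (profit - min_profit) * tokens_per_profit
            if max_per_period is not None:
                amount = min(amount, max_per_period)  # cap a single period's release
        amount = min(amount, remaining)  # never release more than the pool holds
        remaining -= amount
        releases.append(amount)
    return releases


# Hypothetical quarterly profits; a loss-making or flat quarter releases nothing.
quarterly_profit = [-50_000, 0, 120_000, 300_000, 80_000]
print(profit_gated_release(quarterly_profit, pool=1_000_000, tokens_per_profit=2.0,
                           min_profit=25_000, max_per_period=400_000))
```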

The engine uses geometric Brownian motion, a stochastic model common in traditional finance, to simulate volatility in profit projections over multi-year time frames. This brings a standard tool of financial risk modeling to token design.
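Geometric Brownian motion has a standard exact discretization: over a step of length `dt`, `S(t+dt) = S(t) * exp((mu - sigma**2 / 2) * dt + sigma * sqrt(dt) * Z)`, with `Z` a standard normal draw. The sketch below applies it to a profit series over three years with monthly steps. The drift, volatility, and starting profit are placeholder assumptions, and `gbm_path` is a hypothetical helper, not the simulator's code. One property worth knowing: a GBM path never goes below zero, so it models fluctuations in the profit level but cannot represent loss-making periods.

```python
import math
import random


def gbm_path(s0: float, mu: float, sigma: float, years: float,
             steps_per_year: int, rng: random.Random) -> list[float]:
    """One geometric Brownian motion path using the exact log-normal step."""
    dt = 1.0 / steps_per_year
    drift = (mu - 0.5 * sigma ** 2) * dt  # Ito correction keeps E[S(t)] = s0 * exp(mu * t)
    vol = sigma * math.sqrt(dt)
    path = [s0]
    for _ in range(int(round(years * steps_per_year))):
        path.append(path[-1] * math.exp(drift + vol * rng.gauss(0.0, 1.0)))
    return path


rng = random.Random(7)
# Hypothetical inputs: 1M annual profit today, 20% expected growth, 40% volatility.
paths = [gbm_path(1_000_000, 0.20, 0.40, years=3, steps_per_year=12, rng=rng)
         for _ in range(1000)]
finals = sorted(p[-1] for p in paths)
print(f"median year-3 profit: {finals[len(finals) // 2]:,.0f}")
print(f"5th percentile:       {finals[int(0.05 * len(finals))]:,.0f}")
```

Feeding such paths into a profit-based emission schedule shows how often, and how far, emissions would fall short of a straight-line plan.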

“If you’re only emitting tokens when the protocol’s doing well, you’re not just reducing inflation, you’re aligning incentives with reality.”

The result: a system that adjusts over time, favors early adopters if designed that way, and helps teams visualize trade-offs between long-term sustainability and short-term momentum.

3. Gas Cost Estimator

This is the simplest module, but it is crucial for compute-heavy protocols. It models the cost of deploying workloads in decentralized compute environments such as OpenMatter Network.

It accounts for:

Resource requirements (e.g. GPU, memory, bandwidth)

Hardware pricing from node operators

Electricity costs and provider-specific fees

Estimated total cost per container deployment
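The article lists the cost inputs but not how they combine. A minimal sketch, assuming the costs simply add up (hardware billed per hour, bandwidth per GB, electricity from an assumed average power draw per GPU, and a percentage fee on top), might look like this. Every field name, unit, and price below is a hypothetical placeholder, not real pricing from any compute network.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    gpus: int
    memory_gb: float
    bandwidth_gb: float  # data transferred during the job
    hours: float


@dataclass
class ProviderQuote:
    gpu_per_hour: float         # hardware price set by the node operator
    memory_gb_per_hour: float
    bandwidth_per_gb: float
    power_kw_per_gpu: float     # assumed average power draw per GPU, in kW
    electricity_per_kwh: float
    fee_rate: float             # provider-specific fee as a fraction of the subtotal


def estimate_cost(w: Workload, q: ProviderQuote) -> dict[str, float]:
    """Itemized cost of one container deployment under the additive assumption."""
    hardware = (w.gpus * q.gpu_per_hour + w.memory_gb * q.memory_gb_per_hour) * w.hours
    bandwidth = w.bandwidth_gb * q.bandwidth_per_gb
    electricity = w.gpus * q.power_kw_per_gpu * w.hours * q.electricity_per_kwh
    subtotal = hardware + bandwidth + electricity
    fee = subtotal * q.fee_rate
    return {"hardware": hardware, "bandwidth": bandwidth,
            "electricity": electricity, "fee": fee, "total": subtotal + fee}


job = Workload(gpus=4, memory_gb=64, bandwidth_gb=200, hours=24)
quote = ProviderQuote(gpu_per_hour=1.2, memory_gb_per_hour=0.005, bandwidth_per_gb=0.02,
                      power_kw_per_gpu=0.35, electricity_per_kwh=0.15, fee_rate=0.05)
print({k: round(v, 2) for k, v in estimate_cost(job, quote).items()})
```

Keeping the line items separate makes it easy to compare providers whose hardware prices are low but whose electricity or fees are high.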

Think of it as AWS pricing logic for permissionless infrastructure. It’s particularly useful for protocols running zero-knowledge (zk) workloads, scientific research, or AI inference, where cost modeling isn't optional.

Together, these three modules form a composable toolkit for DAO operators, token engineers, and protocol designers who want to move beyond storytelling and into simulation.

“Too many token models assume the future,” Renee said. “We simulate it: messy, volatile, and all.”